
Mach5 revolutionizes search infrastructure with its cutting-edge architecture, establishing a new benchmark in performance and efficiency compared to conventional solutions like OpenSearch and Elasticsearch.

Mach5 introduces an innovative disaggregated storage architecture, which sets it apart from traditional cluster-based implementations with tightly coupled storage and compute. This architectural advancement delivers three transformative benefits:

In this blog, we will examine how Mach5 delivers substantial cost benefits while ensuring better performance compared to OpenSearch and Elasticsearch.

Object stores have become the de facto solution for businesses and individuals seeking to park large volumes of data. They offer:

In the following sections, we will examine how each of these cost drivers impacts the performance and efficiency of a tightly coupled Elasticsearch/OpenSearch architecture compared to Mach5's disaggregated architecture.

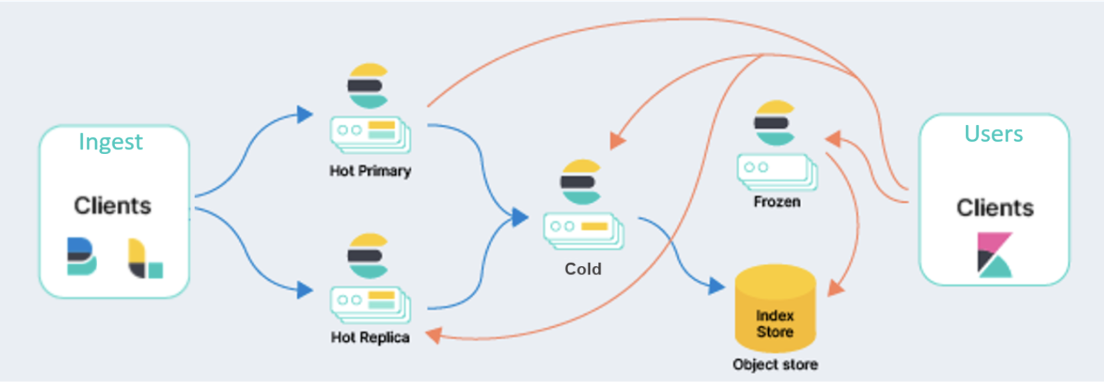

Traditional architectures leverage a distributed cluster model, where data is partitioned into shards and distributed across multiple nodes. Each node manages both the storage and processing of its assigned data. However, due to the tight coupling of compute and storage, scaling one resource necessitates scaling the other—even when only one component requires additional capacity.

This architectural rigidity introduces significant challenges for modern workloads, especially when demand patterns are unpredictable or highly variable. To understand the implications of this design, let’s examine its impact on the key cost drivers:

The architecture requires nodes to be allocated based on anticipated peak usage, creating two significant challenges:

This creates a major operational and financial challenge in environments with variable workloads, particularly when there's a substantial gap between average and peak usage. Organizations must balance resource allocation to maintain optimal performance while controlling costs. The result is often complex capacity planning and deployment strategies that lead to either costly over-provisioning or risky under-provisioning—both of which compromise service quality.
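To make the gap between peak-sized and demand-sized provisioning concrete, here is a minimal sketch. The load profile and the per-node capacity are hypothetical numbers chosen for illustration, not measurements from any deployment, and the function names are ours.

```python
import math


def nodes_needed(load: float, capacity_per_node: float) -> int:
    """Smallest whole number of nodes that can serve `load`."""
    return math.ceil(load / capacity_per_node)


def static_vs_elastic_node_hours(hourly_load, capacity_per_node):
    """Node-hours when sizing for the peak all day vs. sizing hour by hour."""
    peak_nodes = nodes_needed(max(hourly_load), capacity_per_node)
    static_hours = peak_nodes * len(hourly_load)
    elastic_hours = sum(nodes_needed(load, capacity_per_node) for load in hourly_load)
    return static_hours, elastic_hours


# Hypothetical day: 20 quiet hours at 100 queries/s and 4 busy hours at 1,000 queries/s,
# with each node assumed to handle 100 queries/s.
hourly_load = [100] * 20 + [1000] * 4
static_hours, elastic_hours = static_vs_elastic_node_hours(hourly_load, capacity_per_node=100)
print(static_hours, elastic_hours)  # 240 60
```

In this made-up profile the peak-sized cluster consumes four times the node-hours of one that follows demand. The wider the gap between average and peak load, the larger that multiple becomes.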

Traditional node-coupled storage architectures replicate data across multiple nodes to maintain high availability and fault tolerance. This multiplies storage and compute costs by a factor of 2-3, depending on the replication configuration, and the total storage footprint grows accordingly as data volumes increase. Large-scale deployments are hit hardest, because storage often constitutes a significant portion of the total infrastructure budget and the replication overhead compounds with every additional terabyte.

The architecture enforces a fixed ratio between compute and storage resources, so adding compute means adding whole nodes, storage capacity included, even when only compute power is required. Because the two resources cannot be scaled independently, organizations must over-provision entire nodes just to meet specific computational demands, which wastes resources and increases operational costs.
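The effect of a fixed compute-to-storage ratio can be sketched with a small calculation. The node shape (8 TB and 32 vCPUs per node) and the workload figures are hypothetical, and the helper names are ours.

```python
import math


def coupled_nodes(storage_tb: float, vcpus: int, tb_per_node: float, vcpus_per_node: int) -> int:
    """Node count when every node bundles a fixed amount of storage and compute.

    The cluster must satisfy whichever resource demands more nodes.
    """
    by_storage = math.ceil(storage_tb / tb_per_node)
    by_compute = math.ceil(vcpus / vcpus_per_node)
    return max(by_storage, by_compute)


def stranded_storage_tb(storage_tb: float, vcpus: int, tb_per_node: float, vcpus_per_node: int) -> float:
    """Storage that is paid for but not needed because compute drove the node count."""
    nodes = coupled_nodes(storage_tb, vcpus, tb_per_node, vcpus_per_node)
    return nodes * tb_per_node - storage_tb


# Hypothetical compute-heavy workload: 40 TB of data, 640 vCPUs of query load.
nodes = coupled_nodes(40, 640, tb_per_node=8, vcpus_per_node=32)
unused = stranded_storage_tb(40, 640, tb_per_node=8, vcpus_per_node=32)
print(nodes, unused)  # 20 120
```

Storage alone would need 5 nodes, but the compute requirement forces 20, leaving 120 TB of provisioned disk unused in this example.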

Is it possible to design a system that addresses the above challenges encountered with traditional search and analytics platforms?

YES!

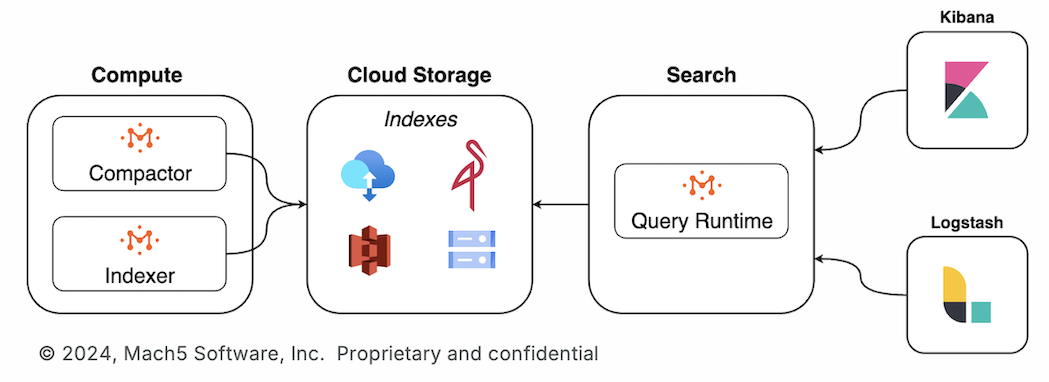

Mach5 answers this question with an innovative, disaggregated architecture powered by Kubernetes. While Mach5 leverages Kubernetes for autoscaling, its core advantages stem from two key architectural elements working in tandem:

This comprehensive architectural approach enables significant cost reductions (21x lower total infrastructure costs and 30-45x lower storage costs per GB) that would be impossible to achieve by simply adding Kubernetes to a traditional search infrastructure. You can learn more about our architecture here.

To better understand how this architecture transforms infrastructure management, let’s evaluate its impact on the same cost drivers:

Unlike traditional systems that rely on static peak estimates, Mach5 uses Kubernetes' native autoscaling capabilities to orchestrate resources dynamically. The system continuously monitors usage in real time and adjusts resources to match demand, which sustains high performance while reducing infrastructure costs by at least a factor of 10. Because resources are allocated and deallocated in response to actual usage, there is no need to maintain excess capacity during off-peak hours, and organizations no longer have to trade cost against performance.
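As an illustration of demand-driven scaling, the sketch below implements the replica calculation documented for the Kubernetes Horizontal Pod Autoscaler: the desired replica count is the current count scaled by the ratio of the observed metric to its target, rounded up. Whether Mach5 relies on this exact controller, and which metrics and bounds it uses, is an assumption on our part; the CPU figures and replica limits are hypothetical.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Replica count following the Horizontal Pod Autoscaler rule:
    ceil(current_replicas * current_metric / target_metric).

    No change is made when the ratio is within `tolerance` of 1.0,
    and the result is clamped to [min_replicas, max_replicas].
    """
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


# Hypothetical search pool of 4 replicas with a 60% CPU utilization target.
print(desired_replicas(4, current_metric=90, target_metric=60))  # 6 (scale out under load)
print(desired_replicas(4, current_metric=15, target_metric=60))  # 1 (scale in when quiet)
print(desired_replicas(4, current_metric=62, target_metric=60))  # 4 (within tolerance)
```

The same rule scales a pool out during a traffic spike and back in afterwards, which is what removes the need to hold peak capacity around the clock.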

Mach5 leverages cloud object storage such as Amazon S3, Google Cloud Storage (GCS), Azure Blob Storage, and MinIO instead of conventional node-attached storage. By adopting this cloud-first architecture, the platform eliminates the costly replication overhead typically seen in traditional search solutions like OpenSearch and Elasticsearch. At the same time, it ensures robust data durability through the redundancy mechanisms provided by these storage systems. This streamlined approach results in a 30-45x reduction in storage costs per gigabyte of raw data.

The disaggregated architecture enhances resource management by enabling compute and storage to scale independently based on demand. This allows compute resources to be precisely matched to specific workloads, such as data ingestion or querying, without the need for over-provisioning. By isolating workloads and optimizing compute power for each task, the system ensures that resources are used efficiently and only when needed. This level of granularity not only improves performance but also drives significant cost savings, as organizations only pay for the compute capacity they actually use, eliminating the need for costly, underutilized infrastructure.

To understand the impact of these architectural choices, let’s compare the infrastructure costs of Mach5 and OpenSearch in a large-scale deployment scenario.

Here's a breakdown of the average storage cost per GB of raw data under different replication scenarios:

| | OpenSearch | Mach5 |
|---|---|---|
| Space used | Raw data size * (1 + number of replicas) * 1.45 | Raw data size / 3 |
| Cost / GB-month | $0.08 | $0.023 |
| Total cost / GB (primary + 1 replica) | $0.232 | $0.0076 |
| Total cost / GB (primary + 2 replicas) | $0.348 | $0.0076 |

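The table reveals stark differences in storage efficiency and cost between OpenSearch deployments and Mach5's architecture. With one replica, OpenSearch stores 2.9 GB for every GB of raw data at $0.08 per GB-month, which works out to $0.232 per raw GB. Mach5 stores one third of a GB in object storage at $0.023 per GB-month, or about $0.0076 per raw GB. That is roughly a 30x difference with one replica and a 45x difference with two.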
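The per-GB figures can be reproduced with a few lines of arithmetic. The prices, the 1.45 overhead factor, and the divide-by-3 footprint come from the table; the constant and function names are ours.

```python
OPENSEARCH_PRICE_PER_GB_MONTH = 0.08     # node-attached storage, from the table
OBJECT_STORE_PRICE_PER_GB_MONTH = 0.023  # object storage, from the table
OPENSEARCH_OVERHEAD = 1.45               # space multiplier per copy, from the table
MACH5_SIZE_DIVISOR = 3                   # "Raw data size / 3", from the table


def opensearch_cost_per_raw_gb(replicas: int) -> float:
    """Monthly storage cost per GB of raw data for a primary plus `replicas` copies."""
    return (1 + replicas) * OPENSEARCH_OVERHEAD * OPENSEARCH_PRICE_PER_GB_MONTH


def mach5_cost_per_raw_gb() -> float:
    """Monthly storage cost per GB of raw data when stored in object storage."""
    return OBJECT_STORE_PRICE_PER_GB_MONTH / MACH5_SIZE_DIVISOR


for replicas in (1, 2):
    opensearch = opensearch_cost_per_raw_gb(replicas)
    mach5 = mach5_cost_per_raw_gb()
    print(f"primary + {replicas} replica(s): "
          f"OpenSearch ${opensearch:.3f}, Mach5 ${mach5:.4f}, ratio {opensearch / mach5:.1f}x")
```

Note that the Mach5 value is $0.00767 before rounding (the table shows $0.0076), so the computed ratios land at roughly 30x and 45x.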

Let’s take a closer look at the costs involved in a real-world deployment of OpenSearch vs Mach5:

| | OpenSearch (primary + 1 replica) | Mach5 |
|---|---|---|
| Stored data size | 696 TB | 80 TB |
| Machine type | i3.8xlarge.search ($3.994 / hour) | Search: i4i.8xlarge ($2.746 / hour); Ingestion: m6id.2xlarge ($0.4746 / hour) |
| # of machines | ~100 | 4 (search) + 2 (ingestion) |
| Cost / hour (on-demand) | $399.4 | $11.9332 |
| Storage cost | Included | $2.616 / hour (S3 storage) |
| Mach5 licensing cost | | $36,864 |
| Total cost / year | $3,498,744 | $127,451 + $36,864 = $164,315 |
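The yearly totals follow directly from the hourly rates in the table. The sketch below redoes that arithmetic, assuming on-demand pricing for all 8,760 hours of a year, exactly 100 OpenSearch machines (the table says roughly 100), and a licensing fee that is charged per year. The variable names are ours.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760

# OpenSearch: ~100 i3.8xlarge.search nodes, storage included in the node price.
opensearch_hourly = 100 * 3.994
opensearch_yearly = opensearch_hourly * HOURS_PER_YEAR

# Mach5: 4 search nodes, 2 ingestion nodes, S3 storage, plus licensing.
mach5_compute_hourly = 4 * 2.746 + 2 * 0.4746
mach5_storage_hourly = 2.616
mach5_license_yearly = 36_864
mach5_infra_yearly = (mach5_compute_hourly + mach5_storage_hourly) * HOURS_PER_YEAR
mach5_yearly = mach5_infra_yearly + mach5_license_yearly

print(f"OpenSearch: ${opensearch_yearly:,.0f} per year")
print(f"Mach5:      ${mach5_infra_yearly:,.0f} + ${mach5_license_yearly:,} = ${mach5_yearly:,.0f} per year")
print(f"Ratio:      {opensearch_yearly / mach5_yearly:.1f}x")
```

Under these assumptions the totals match the table, and the ratio comes out at about 21x.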

The analysis confirms that Mach5 offers exceptional cost efficiency compared to traditional search infrastructures like OpenSearch and Elasticsearch. With its disaggregated storage architecture and dynamic resource scaling, Mach5 delivers:

These benefits stem from Mach5’s fundamental architectural advantages, including:

For organizations seeking to optimize search infrastructure costs while maintaining high performance, Mach5 provides a transformative solution that redefines cost efficiency at scale.

OpenSearch sizing: AWS OpenSearch Sizing Guide